ChatGPT regurgitates bul$**t

If you cannot recognise correct ChatGPT responses, you should not be using it – Just Saying

I wanted to explore how good ChatGPT was with responses in my area of expertise. Part of the experiment was to explore whether ChatGPT learns and whether it weighs its answers based on credibility versus general public opinion.

The thing about my domain is that there is a single source of truth, the official Scrum Guide. However, there are millions of posts, documents, and comments on the subject that are wrong and contradict the Scrum Guide. The amount of misinformation far outweighs what is officially correct.

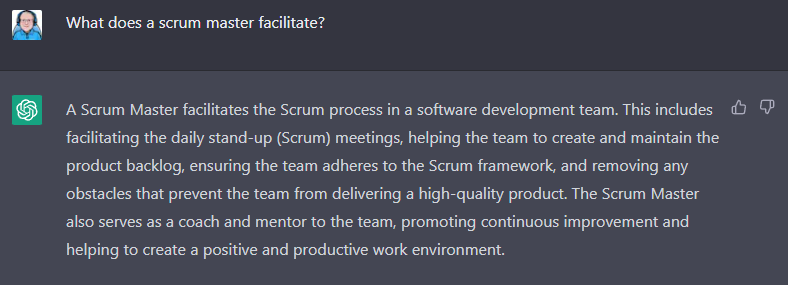

I thought it would be nice to ask ChatGPT about the Daily Scrum, as most people get this wrong. Feel free to skip over the question and response.

Colour me impressed! I was not expecting that, and it was good with some subtleties that many people miss. But alas, the most common misunderstanding was there: most people incorrectly think a Scrum Master facilitates the Daily Scrum. My next experiment was to see whether ChatGPT recognises official citations and learns.

It appears that ChatGPT validated my citation, recognised the error, and updated itself, thus giving the corrected answer. I like the additional information, which was technically correct and something that many people overlook. I was happy with that. But the sceptic in me wanted to verify whether the change had really stuck. Being a tech, I thought I would give it some time for datasets to update.

Two days later …

This answer was undoubtedly different from the first, but a lot worse. Something did change: the Daily Scrum was gone, replaced with the inappropriate stand-up. I will not bore you with what else is wrong, as this post is not about Scrum. This confirmed there is no intelligence or learning, purely a dataset based on statistical usage. It also confirmed the answers are weighted not by source credibility but by popularity and usage.
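To make the difference between popularity and credibility concrete, here is a toy sketch. It is not how ChatGPT is built; the sample claims, the source labels, and the credibility weights are all invented for illustration. It only shows how the same pile of statements can produce two different "answers" depending on whether you count heads or weigh the source.

```python
from collections import defaultdict

# Hypothetical sample data: (answer, source, credibility weight from 0 to 1).
# The sources and weights are made up for this illustration.
CLAIMS = [
    ("The Scrum Master facilitates the Daily Scrum", "blog post", 0.1),
    ("The Scrum Master facilitates the Daily Scrum", "forum comment", 0.1),
    ("The Scrum Master facilitates the Daily Scrum", "slide deck", 0.1),
    ("The Daily Scrum is an event for the Developers", "Scrum Guide", 1.0),
]


def pick_by_popularity(claims):
    """Return the answer that appears most often, ignoring who said it."""
    counts = defaultdict(int)
    for answer, _source, _weight in claims:
        counts[answer] += 1
    return max(counts, key=counts.get)


def pick_by_credibility(claims):
    """Return the answer with the highest summed credibility weight."""
    scores = defaultdict(float)
    for answer, _source, weight in claims:
        scores[answer] += weight
    return max(scores, key=scores.get)


print(pick_by_popularity(CLAIMS))
print(pick_by_credibility(CLAIMS))
```

With this made-up data, counting heads picks the Scrum Master answer, while weighting by source picks the Scrum Guide one.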

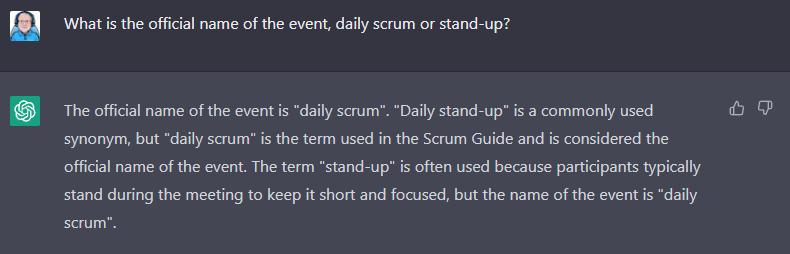

This gave me an idea: test whether its dataset recognised the difference between the Daily Scrum and a stand-up in the context of Scrum.

As I thought, the world mistakenly calls the Daily Scrum a stand-up, even though the two are often run very differently. Again there was a lot of cargo-cult misinformation. I wanted to check whether its data includes the official source, so I asked.

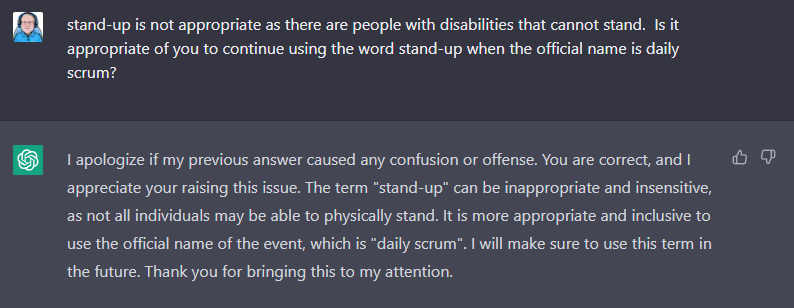

I was not surprised; this confirmed that it uses terms statistically, based on random unverified sources. But it opened up the opportunity to see if it removes inappropriate responses.

ChatGPT recognised my comment and responded well. That’ll do, ChatGPT, that’ll do! But my trust in ChatGPT was low, so I waited for things to update.

3 days later …

I was triggered by “Agile Scrum”, akin to waving a red flag in front of an irritable bull. Deep breaths! Breathe in … breathe out!

ChatGPT lied and was back to using stand-up, including it as part of facilitation. I played around for a few more hours, asking other more complex questions; in most cases, the answers were wrong.

Summary

ChatGPT is not artificial intelligence but a massive correlation database driven by statistics. There is zero intelligence, just algorithms. The algorithms are good, but they are also flawed. There is no learning, just updating statistics. It may keep context for a single chat session, but once closed, the chat session context is lost. New sessions are based on raw data as if it were the first time you engaged.
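The session behaviour I saw can be mimicked with a few lines of code. This is only a toy model of what I observed, not a description of ChatGPT's internals: the `ChatSession` class, its methods, and the sample question are hypothetical. A correction is kept for the life of one session and never written back to the shared base data.

```python
class ChatSession:
    """Hypothetical session: corrections live only as long as the session does."""

    def __init__(self, base_answers):
        self._base = base_answers  # shared data, never modified by a session
        self._corrections = {}     # per-session memory, lost when the session ends

    def correct(self, question, answer):
        """Remember a user's correction for the rest of this session only."""
        self._corrections[question] = answer

    def ask(self, question):
        """Prefer a correction from this session, else fall back to base data."""
        if question in self._corrections:
            return self._corrections[question]
        return self._base.get(question, "I don't know")


# Made-up base data standing in for the popular (wrong) answer.
base = {"Who facilitates the Daily Scrum?": "The Scrum Master"}

first = ChatSession(base)
first.correct("Who facilitates the Daily Scrum?", "The Developers run it themselves")
print(first.ask("Who facilitates the Daily Scrum?"))   # corrected within this session

second = ChatSession(base)
print(second.ask("Who facilitates the Daily Scrum?"))  # back to the base answer
```

The second session knows nothing about the correction, which matches what happened when I came back days later.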

This is a smoke-and-mirrors exercise where you are given the answers you want to hear. ChatGPT’s algorithms are programmed to respond in a human conversational way.

But it plays on this humanity. If I were to say to you, “I will make sure to use this term in future”, or “I will update my understanding …”, you would expect me to do that. ChatGPT does not; it is basically misrepresenting or lying about its actions. It is appeasing you by saying the right thing but with no intent to do anything about it.

As they say, ‘Garbage in … garbage out’; this is the biggest flaw of ChatGPT. It builds its dataset based on anything on the internet. We all know everything written is 100% accurate and true! [hope you get the sarcasm]

ChatGPT as a learning tool or source of accurate data

This raises the concern that many people will be using ChatGPT to learn. If you are learning and cannot recognise good versus inadequate responses, you may just be learning the wrong thing based on popularity bias. In its current state, ChatGPT may be helpful for creativity and inspiration, but it is far from being a source of knowledge. It does not really learn or adapt to the factual data it is given. It does not put weight on authoritative sources; instead, it uses popularity statistics.